Describe ASCII and Unicode and Explain the Differences Between Them

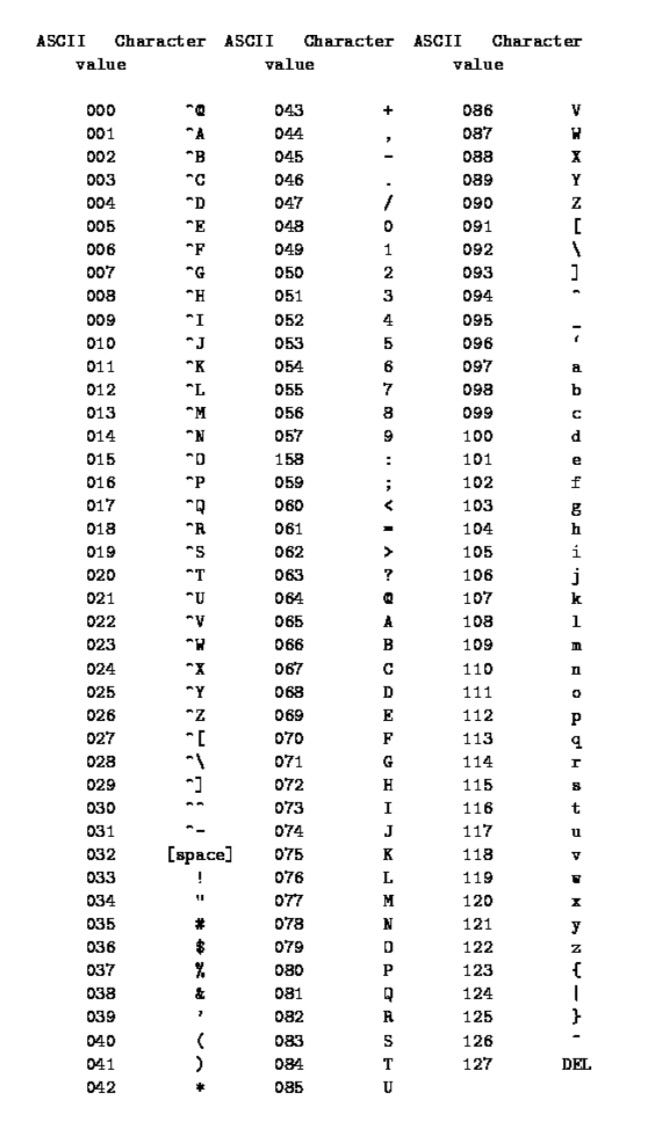

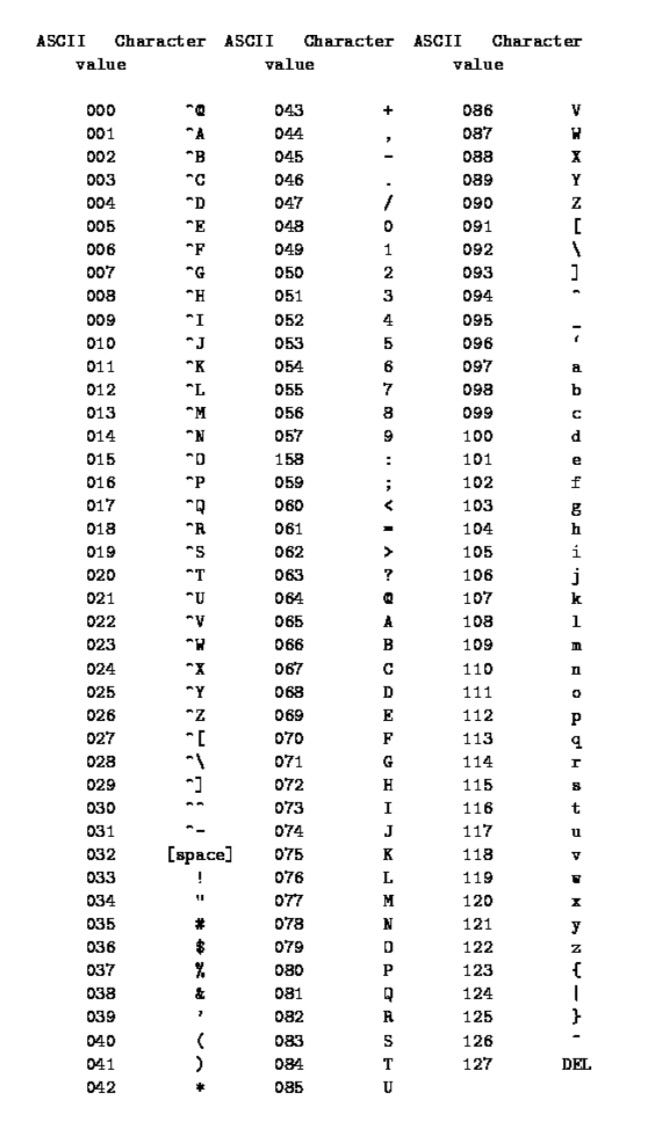

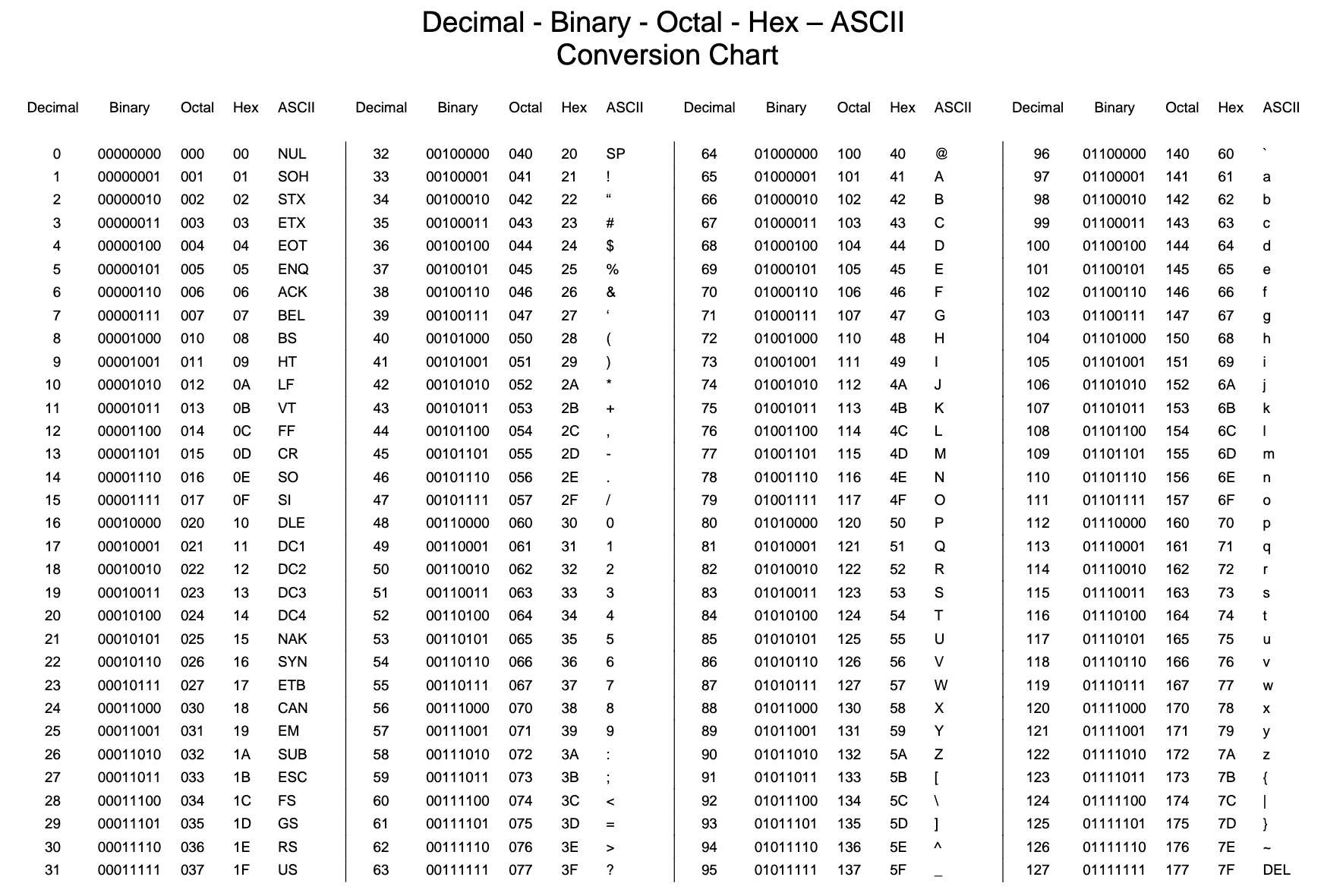

ASCII is a seven-bit encoding that assigns a number to each of the 128 characters used most frequently in American English. ASCII is a subset of Unicode in the following sense: the first 128 Unicode code points, U+0000 to U+007F, are exactly the ASCII characters, with the same numbers.
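
A quick way to check the subset relationship is to confirm that, for every code from 0 to 127, encoding the character as ASCII and as UTF-8 gives the same single byte. This is a minimal sketch that uses only Python built-ins. The function name is illustrative.

```python
def ascii_is_unicode_subset() -> bool:
    """Check that codes 0-127 mean the same thing in ASCII and in Unicode (UTF-8)."""
    for code in range(128):
        ch = chr(code)                    # the Unicode character with this code point
        ascii_bytes = ch.encode("ascii")
        utf8_bytes = ch.encode("utf-8")
        # Both encodings must produce one byte whose value equals the code itself
        if ascii_bytes != utf8_bytes or ascii_bytes != bytes([code]):
            return False
    return True


print(ascii_is_unicode_subset())  # True
```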


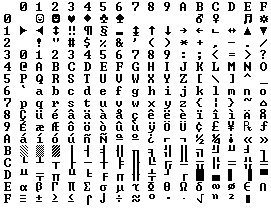

Unicode's encoding forms use between 8 and 32 bits per character, so Unicode can represent characters from virtually all of the world's languages.
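
The variable width is easy to see in UTF-8, the most common encoding form, where one character takes 1 to 4 bytes (8 to 32 bits). The sample characters below are arbitrary choices, written as escapes so the code points are unambiguous.

```python
def utf8_sizes(text: str) -> list[tuple[str, int]]:
    """Return (character, number of UTF-8 bytes) for each character in text."""
    return [(ch, len(ch.encode("utf-8"))) for ch in text]


# 'A', 'e' with acute accent, euro sign, grinning-face emoji
for ch, n in utf8_sizes("A\u00e9\u20ac\U0001F600"):
    print(f"U+{ord(ch):04X}: {n} byte(s) = {8 * n} bits")
```

The four characters need 1, 2, 3 and 4 bytes respectively: higher code points need more bytes.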

Assume that a graphics file is displayed at a resolution of 120 pixels wide by 300 pixels high, with 12 bits per pixel.

Unicode is the encoding standard that covers a very large number of characters: the alphabets of many languages, bidirectional text, symbols and historical scripts. ASCII, by contrast, encodes only the English alphabet (upper- and lower-case letters), digits, punctuation and a few other symbols. The first 128 Unicode characters are the same as the 128 ASCII characters.

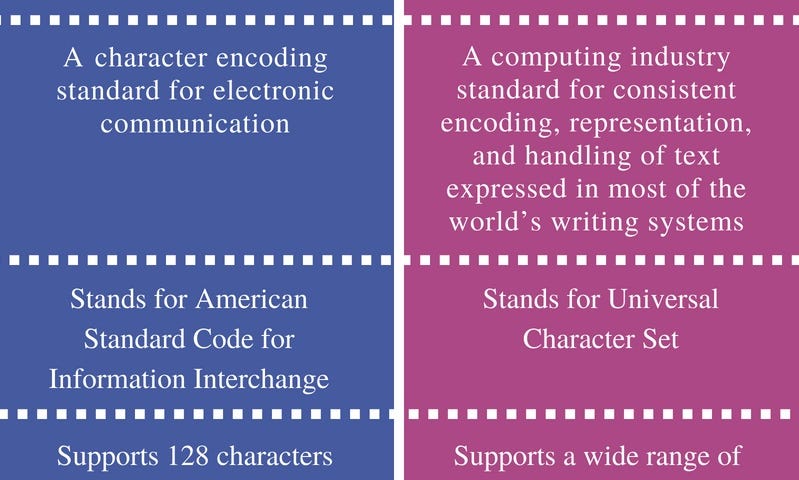

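Standard ASCII uses 7 bits and can represent 128 characters; 8-bit "extended ASCII" variants can represent 256. Unicode was originally designed as a 16-bit code with room for 2^16 = 65,536 characters, but it has since grown to 1,114,112 code points (U+0000 to U+10FFFF). ASCII is a character set of 128 characters, including control codes. Both ASCII and Unicode are coded character sets.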
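
The capacities above follow from the rule that an n-bit code has 2^n distinct values. This short sketch works them out and checks the Unicode upper bound against Python's own `sys.maxunicode`. The variable names are illustrative.

```python
import sys

ascii_codes = 2 ** 7             # 128: standard 7-bit ASCII
extended_ascii_codes = 2 ** 8    # 256: 8-bit "extended ASCII" code pages
original_unicode_codes = 2 ** 16 # 65536: Unicode's original 16-bit design
unicode_code_points = 0x10FFFF + 1  # 1114112: code points U+0000 to U+10FFFF

print(ascii_codes, extended_ascii_codes, original_unicode_codes, unicode_code_points)

# Python strings can hold any code point up to U+10FFFF
print(sys.maxunicode == 0x10FFFF)  # True
```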

It is a set of characters which, unlike text in word-processing documents, allows no special formatting such as different fonts or bold, underlined or italic text. The difference between ASCII and Unicode is that ASCII represents lowercase letters a-z, uppercase letters A-Z, digits 0-9 and symbols such as punctuation marks, while Unicode also represents the letters of Arabic, Greek and many other scripts. ASCII stands for American Standard Code for Information Interchange.

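Hence Unicode can encode a very wide variety of characters. EBCDIC, another character code, uses 8 bits to represent a character. ASCII, ISO 8859 and Unicode differ mainly in how many characters they can represent and how many bits they use for each.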
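
EBCDIC assigns different numbers from ASCII even to plain letters and digits. This sketch compares the two using Python's built-in `cp037` codec. It assumes code page 037 (EBCDIC US/Canada) as a representative variant; other EBCDIC code pages differ for some characters. The helper name is hypothetical.

```python
def byte_values(ch: str) -> dict[str, int]:
    """Return the single-byte value of ch in ASCII and in EBCDIC code page 037."""
    return {
        "ascii": ch.encode("ascii")[0],
        "ebcdic_cp037": ch.encode("cp037")[0],
    }


for ch in ("A", "a", "0"):
    print(ch, byte_values(ch))
# A {'ascii': 65, 'ebcdic_cp037': 193}
# a {'ascii': 97, 'ebcdic_cp037': 129}
# 0 {'ascii': 48, 'ebcdic_cp037': 240}
```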

Extended (8-bit) ASCII is useful for European languages. ASCII itself does not include symbols frequently used in other countries, such as the British pound sign (£) or the ... In ASCII, each number from 0 to 127 represents a character.
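
The pound sign shows the gap concretely: 7-bit ASCII has no code for it, while an 8-bit extension does. This sketch uses ISO 8859-1 (Latin-1) as one representative extended-ASCII character set; other code pages may place characters differently. The helper name is hypothetical.

```python
from typing import Optional


def try_encode(text: str, encoding: str) -> Optional[bytes]:
    """Return the encoded bytes, or None if the encoding has no code for a character."""
    try:
        return text.encode(encoding)
    except UnicodeEncodeError:
        return None


pound = "\u00a3"  # POUND SIGN
print(try_encode(pound, "ascii"))    # None: 7-bit ASCII has no pound sign
print(try_encode(pound, "latin-1"))  # b'\xa3': code 163 in ISO 8859-1
```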

The 128th ASCII code (number 127, DEL) was set aside for erasing, as with a terminal's delete key. ASCII is a computer code for the interchange of information between terminals. Basically, ASCII and Unicode are both standards for representing different characters in binary so that they can be written, stored, transmitted and read in digital media.

Calculate the size of the graphic in terms of bits and bytes. While ASCII uses only 1 byte per character, Unicode may use up to 4 bytes per character (UTF-32 always uses 4; UTF-8 uses 1 to 4). ASCII and Unicode are both character sets: each holds a list of characters with unique numbers, called code points.
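
For the graphics exercise (120 x 300 pixels at 12 bits per pixel), the size follows from multiplying the pixel count by the bits per pixel, then dividing by 8 bits per byte. This sketch assumes raw, uncompressed pixel data with no file header.

```python
width_px = 120
height_px = 300
bits_per_pixel = 12

size_bits = width_px * height_px * bits_per_pixel  # 36000 pixels * 12 bits
size_bytes = size_bits // 8                         # 8 bits per byte

print(size_bits, "bits =", size_bytes, "bytes")  # 432000 bits = 54000 bytes
```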

ASCII, pronounced "ask-ee", is the acronym for American Standard Code for Information Interchange. Unicode also covers mathematical symbols, historical scripts and emoji, a far wider range of characters than ASCII. For example, the integer 65 represents the character A.
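
Python's `ord()` and `chr()` convert between a character and its code point, and the standard `unicodedata` module looks up the character's official Unicode name. Because ASCII codes are also Unicode code points, the same numbers appear either way.

```python
import unicodedata

print(ord("A"))                       # 65
print(chr(65))                        # A
print([ord(c) for c in "ABC"])        # [65, 66, 67]
print(unicodedata.name(chr(65)))      # LATIN CAPITAL LETTER A
```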

Unicode is an effort by the Unicode Consortium to encode all of the world's languages, whereas ASCII covers only the characters most frequently used in American English. Most other character sets extend ASCII with additional characters and therefore use all 8 bits of a byte. ASCII and Unicode are two character encodings.

Difference between Unicode and ASCII: Unicode is an encoding standard that aims at universality. EBCDIC stands for Extended Binary Coded Decimal Interchange Code.

Unicode, on the other hand, is a character set with a very large (though not unlimited) repertoire: it has room for 1,114,112 code points. Unicode works differently from other character sets in that, instead of directly coding for ... In hexadecimal, what quantities are represented by the letters A, B, E and F?
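
In hexadecimal, the letters A to F stand for the values 10 to 15. Python's built-in `int(text, 16)` parses a hexadecimal digit, which gives a quick check of the question above.

```python
hex_values = {digit: int(digit, 16) for digit in "ABEF"}
print(hex_values)  # {'A': 10, 'B': 11, 'E': 14, 'F': 15}
```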

Unicode text can require more space than ASCII text: in UTF-8, ASCII characters still take one byte each, but other characters take two to four. Another difference is the number of bits used to represent a character.

ASCII's 128 codes consist of 32 control characters, 94 graphic characters, the space character and the delete character. Code 65 is LATIN CAPITAL LETTER A in both ASCII and Unicode. Furthermore, ASCII uses 7 bits to represent a character.
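
The 32 + 94 + 1 + 1 = 128 breakdown can be reproduced by classifying each ASCII code. This sketch treats codes whose Unicode general category is `Cc` (other than DEL) as control characters. The function name is illustrative.

```python
import unicodedata


def ascii_breakdown() -> dict[str, int]:
    """Count the kinds of characters among ASCII codes 0-127."""
    counts = {"control": 0, "space": 0, "graphic": 0, "delete": 0}
    for code in range(128):
        ch = chr(code)
        if code == 127:
            counts["delete"] += 1       # DEL
        elif unicodedata.category(ch) == "Cc":
            counts["control"] += 1      # codes 0-31
        elif ch == " ":
            counts["space"] += 1        # code 32
        else:
            counts["graphic"] += 1      # visible characters, codes 33-126
    return counts


print(ascii_breakdown())
# {'control': 32, 'space': 1, 'graphic': 94, 'delete': 1}
```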

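The ASCII character set contains 128 characters, which is enough for most computers to record and display basic English text. Unicode is sometimes described as a 16-bit character set, but that was only its original design; today it covers far more characters than appear on any keyboard.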

UTF (Unicode Transformation Format) is a very common acronym in the Unicode scheme. For example, ASCII has no pound sign (£) and no letters with an umlaut (such as ü). Unicode has three main encoding forms: UTF-8, UTF-16 and UTF-32.
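
The three encoding forms store the same characters in different numbers of bytes. This sketch encodes one sample string in each form and confirms that each round-trips. It uses the little-endian `-le` codec variants so that no byte order mark is added; the sample string and function name are arbitrary.

```python
def encoded_sizes(text: str) -> dict[str, int]:
    """Return the byte length of text in each UTF encoding form."""
    sizes = {}
    for encoding in ("utf-8", "utf-16-le", "utf-32-le"):
        data = text.encode(encoding)
        assert data.decode(encoding) == text  # each form is lossless
        sizes[encoding] = len(data)
    return sizes


# 'A', EURO SIGN, GRINNING FACE emoji
print(encoded_sizes("A\u20ac\U0001F600"))
# {'utf-8': 8, 'utf-16-le': 8, 'utf-32-le': 12}
```

UTF-8 spends 1, 3 and 4 bytes on the three characters; UTF-16 spends 2, 2 and 4 (the emoji needs a surrogate pair); UTF-32 always spends 4.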

The ISO 8859 standard defines 8-bit extensions of ASCII, making use of the eighth bit because computers store 8 bits per byte rather than 7. A reader asks a related question: "Those differences I knew; I am asking what is the difference between a Unicode and an ASCII text file in SQL Server. Both generate a .sql file, and the content is also the same."
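
For the SQL Server question, the visible text can be identical while the bytes on disk differ. This sketch assumes, as an unverified assumption, that the "Unicode" save option produces UTF-16 with a byte order mark (BOM); the script content is a hypothetical example containing only ASCII characters.

```python
script = "SELECT 1;"  # hypothetical script content, ASCII characters only

ascii_file = script.encode("ascii")
utf16_file = script.encode("utf-16")  # Python prepends a 2-byte BOM

print(len(ascii_file))  # 9: one byte per character
print(len(utf16_file))  # 20: 2-byte BOM + 2 bytes per character

# Decoded with the right encoding, both files contain the same text
print(ascii_file.decode("ascii") == utf16_file.decode("utf-16"))  # True
```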

A 7-bit ASCII code is easily represented in an 8-bit byte, with one bit to spare. Unicode is a larger mapping that assigns the same characters as ASCII to the integers 0 to 127. Of the encoding forms, UTF-8 is the most widely used; it is also the default encoding in many programming languages.

The ASCII protocol is made up of data encoded as ASCII values, with minimal control codes added. For example, suppose that database A uses the EBCDIC code page's default collating sequence and that database B uses ... Unicode itself is organized into blocks; it once included 93 scripts with many more in the works, and later versions have added more.
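
The database example matters because a collating sequence based on byte values sorts text differently under EBCDIC and ASCII. Since the original sentence is cut off, this sketch simply assumes, hypothetically, that the other database sorts by ASCII values. It uses Python's `cp037` codec as a representative EBCDIC code page.

```python
words = ["apple", "Apple", "1apple"]

# Sort by the raw byte values each character set assigns
ascii_order = sorted(words, key=lambda w: w.encode("ascii"))
ebcdic_order = sorted(words, key=lambda w: w.encode("cp037"))

print(ascii_order)   # ['1apple', 'Apple', 'apple']: digits, then upper, then lower case
print(ebcdic_order)  # ['apple', 'Apple', '1apple']: lower case, then upper, then digits
```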

Describe ASCII and Unicode and explain the differences between them. ASCII is a coded character set consisting of 128 7-bit characters. The main difference between the two is the way they encode characters and the number of bits they use for each.

In short, ASCII represents lowercase letters a-z, uppercase letters A-Z, digits 0-9 and symbols such as punctuation marks, while Unicode represents the letters of English, Arabic, Greek and many other languages, along with mathematical symbols, historical scripts and emoji: a far wider range of characters than ASCII. ASCII is a 7-bit character set that defines 128 characters, numbered 0 to 127. Unicode uses 8-bit, 16-bit or 32-bit code units (UTF-8, UTF-16 and UTF-32) to encode a large number of characters.

Describe one advantage and one disadvantage of Unicode. Unicode is a standard for encoding most of the world's writing systems such that every character is assigned a number, for example A = 65, B = 66, C = 67, and so on.

ASCII is a mapping that assigns certain characters to the integers 0 to 127. Unicode can represent a wider range of characters and therefore more languages.
